
AI Search: Delivering great answers to inquiring consumers

Our new AI-powered search improves knowledge sharing

October 24, 2022 • 8 minutes

At LivePerson, we know our brands have accumulated a wealth of knowledge to share with their consumers. Those consumers often ask questions like:

- What’s your return policy?

- How can I change my address in my account?

- Do you allow pets on flights?

Helping our brands capitalize on and scale their knowledge — so they can provide relevant results instantly for an optimal customer experience — is one of LivePerson’s key goals. That experience might be one-on-one with an agent or an automated conversation with an AI chatbot, powered by our Conversational AI. KnowledgeAI, LivePerson’s knowledge base software solution, meets this need, and our new search service makes it even better.

Meet the knowledge base software

KnowledgeAI allows brands to ingest or “hook up” their content so that it can be retrieved via searches during automated conversations between consumers and bots, and during chats between consumers and agent users. In the latter case, the content is “recommended” to the agent based on the consumer’s message, then the agent can choose to send it on to the consumer. In both cases, the consumer gets the quality answer they need, quickly. This improves the chat system’s throughput tremendously.

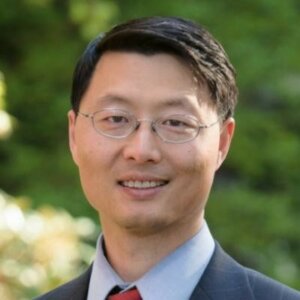

Our KnowledgeAI offering has two major components. The first lets a brand bring its public content or internal documents into the knowledge base, in an automatic or semi-automatic manner (content review is usually required to guarantee quality and scope). The second component retrieves the relevant knowledge base articles — the answer to the consumer’s question.

Introducing AI Search

AI Search is KnowledgeAI’s new advanced, out-of-the-box search service based on the latest research in deep semantic searches. It requires no setup. Just add your content to the knowledge base system, hook it up to a bot or expose it to your agents, and go. In this first version of AI Search, we’ve focused on its search capabilities. But we won’t be stopping there! There’s more to come.

Wondering what’s under the hood?

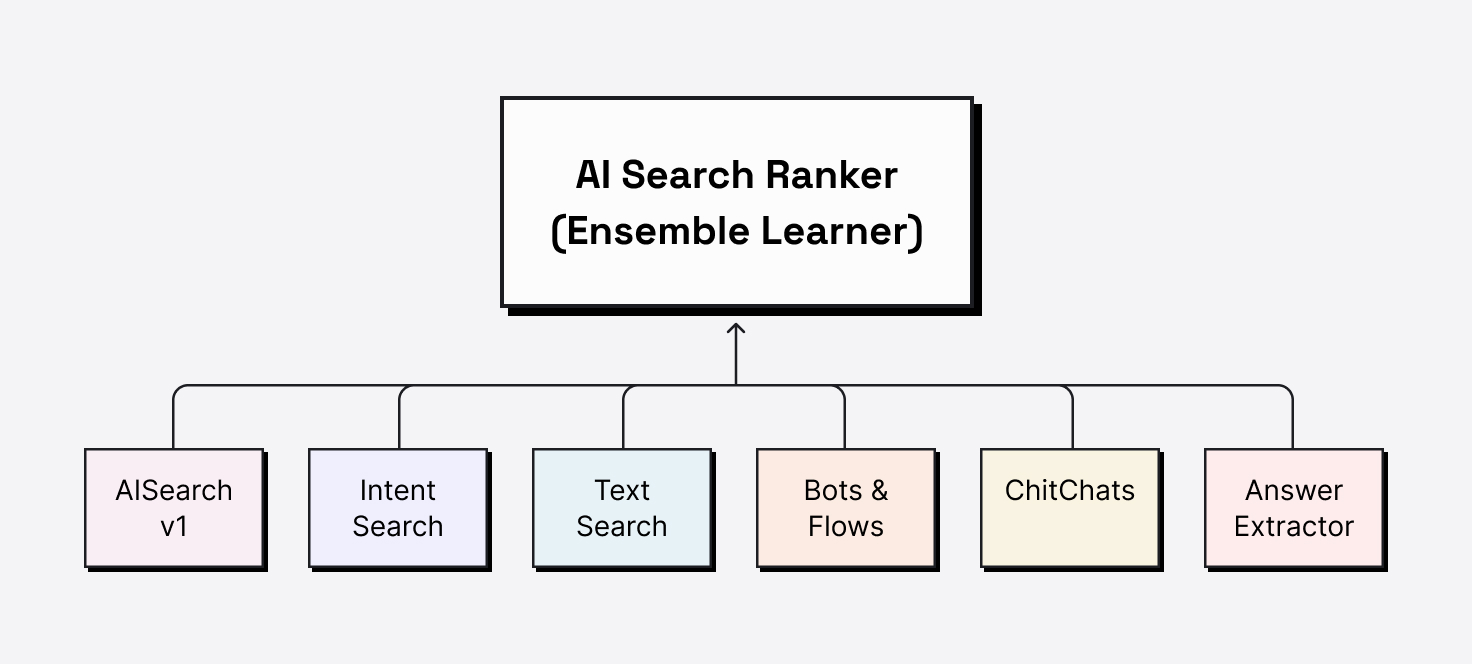

AI Search uses state-of-the-art, transformer-based language models that condense the meanings and usages of words into neural embeddings (vectors). It then conducts a k-nearest neighbor search in the semantic space to find the articles most similar to the consumer’s question. Because it is based on language models that were trained on vast amounts of data and tuned for question answering, it gets to the meaning of words and handles homonyms and synonyms well. With a sentence-transformer at its core, it is also context-aware and can interpret a sentence as a whole.
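To make the k-nearest neighbor step concrete, here is a minimal sketch, not LivePerson’s implementation. It assumes the embeddings already exist and uses cosine similarity as the distance measure; the three-dimensional vectors, the article titles, and the function name `top_k_by_cosine` are made up for illustration.

```python
import numpy as np

def top_k_by_cosine(query_vec, article_vecs, k=2):
    """Return (index, similarity) pairs for the k articles closest to the query."""
    # Normalize so that a dot product equals cosine similarity
    q = query_vec / np.linalg.norm(query_vec)
    a = article_vecs / np.linalg.norm(article_vecs, axis=1, keepdims=True)
    sims = a @ q
    # Sort by descending similarity and keep the first k
    order = np.argsort(-sims)[:k]
    return [(int(i), float(sims[i])) for i in order]

# Toy embeddings (assumption): a real model produces hundreds of dimensions
articles = ["Return policy", "Change account address", "Pets on flights"]
article_vecs = np.array([
    [0.9, 0.1, 0.0],
    [0.1, 0.9, 0.1],
    [0.0, 0.1, 0.9],
])
# Stands in for the embedding of a question such as "Can I send an item back?"
query_vec = np.array([0.8, 0.2, 0.1])

for idx, score in top_k_by_cosine(query_vec, article_vecs, k=2):
    print(articles[idx], round(score, 3))
```

A brute-force scan like this is fine for a handful of articles; large knowledge bases typically rely on an approximate nearest neighbor index instead.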

Testing things out

Based on our research, we expected our new AI Search to outperform our existing Text Search by a wide margin. But we are data-driven, so we needed to test that expectation, particularly in real conversational scenarios. We wanted to see how AI Search performs in user chats, over multiple domains, and across brands. This called for detailed quantitative analysis.

To measure AI Search, both in an absolute manner and against other search engines, we needed to have “golden datasets” that were high quality, large enough to make the results statistically significant, and pertinent to the domain. But getting a dataset like this wasn’t easy.

Sure, we could have used public datasets, but public datasets (SQuAD, Yahoo Answers, Movie Dialog corpus, ConvAI2, etc.) are mostly standard question-answering or chitchat datasets. They differ significantly from the actual task-oriented conversations that occur on LivePerson’s Conversational Cloud. So, we couldn’t use public datasets to achieve our goal or accurately evaluate how our AI-powered search performs. We had to invest in the creation of LivePerson golden datasets.

Getting great data

Fortunately, LivePerson’s taxonomy team can create annotations. This gave us two good-quality datasets to use for testing this technology:

- A paraphrase dataset that covers frequent questions in six common domains (industries). We used it to compare AI Search and Text Search: How did each handle paraphrases when searching internal knowledge bases?

- A query-answer dataset that uses real consumer queries from our production environment and real knowledge bases from our brands, all evaluated with 5 + 1 levels of relevance ratings. The five annotated levels were Poor, Fair, Fair+, Good, and Very Good, with one additional level that was computed: Perfect/Exact Match. Each query-answer pair was annotated by at least three human annotators. We also computed Fleiss’ kappa scores to make sure we had adequate agreement among these annotators.
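For readers unfamiliar with the agreement check mentioned above, the following is a small sketch of the standard Fleiss’ kappa formula. The rating counts are invented for illustration and are not LivePerson data; the sketch also assumes every item has the same number of ratings.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa for a table where counts[i][j] is the number of
    annotators who assigned item i to category j.
    Assumes every item received the same number of ratings."""
    n_items = len(counts)
    n_raters = sum(counts[0])
    n_categories = len(counts[0])

    # Share of all ratings that fell into each category
    p_cat = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(n_categories)
    ]
    # Observed agreement for each item
    p_item = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_observed = sum(p_item) / n_items
    p_expected = sum(p * p for p in p_cat)
    # Undefined (division by zero) if every rating is in a single category
    return (p_observed - p_expected) / (1 - p_expected)

# Made-up example: 4 query-answer pairs, 3 annotators, 5 rating levels
# Columns: Poor, Fair, Fair+, Good, Very Good
toy_counts = [
    [3, 0, 0, 0, 0],
    [0, 2, 1, 0, 0],
    [0, 0, 0, 1, 2],
    [0, 0, 0, 0, 3],
]
print(round(fleiss_kappa(toy_counts), 3))
```

A value of 1 means perfect agreement, and values near 0 mean agreement no better than chance.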

After running real production queries against the datasets and collecting answers, we computed the metrics. The results were exciting.

Getting even better results

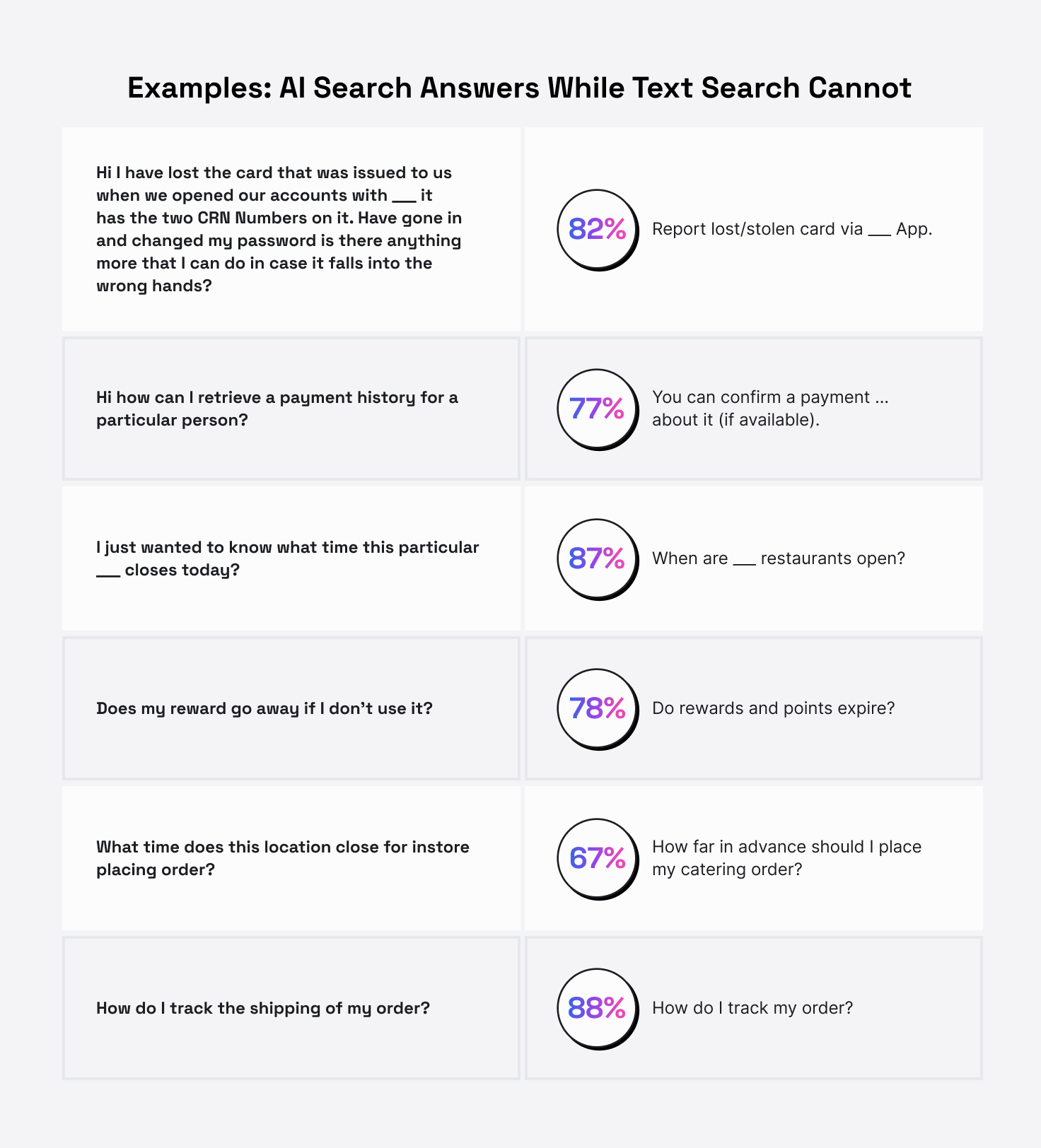

Our empirical studies validated our expectations. In fact, among all the tests we did, we didn’t find a single case where AI Search delivered worse results than Text Search. The result for AI Search was always equal or better. We also learned that AI Search can often perform as well as or better than an Intent-based Search, which requires some degree of setup (i.e., training phrases that are manually added).

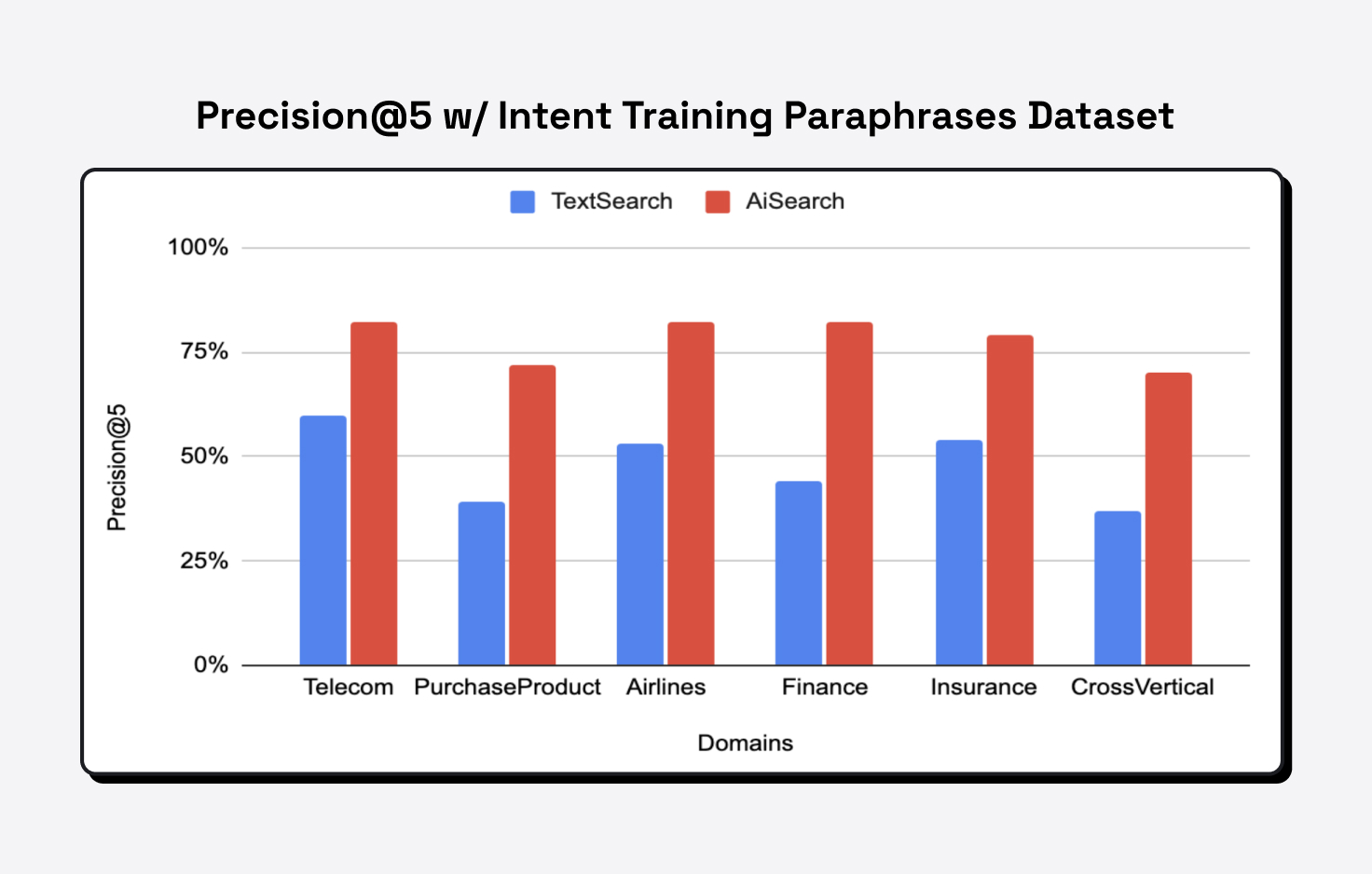

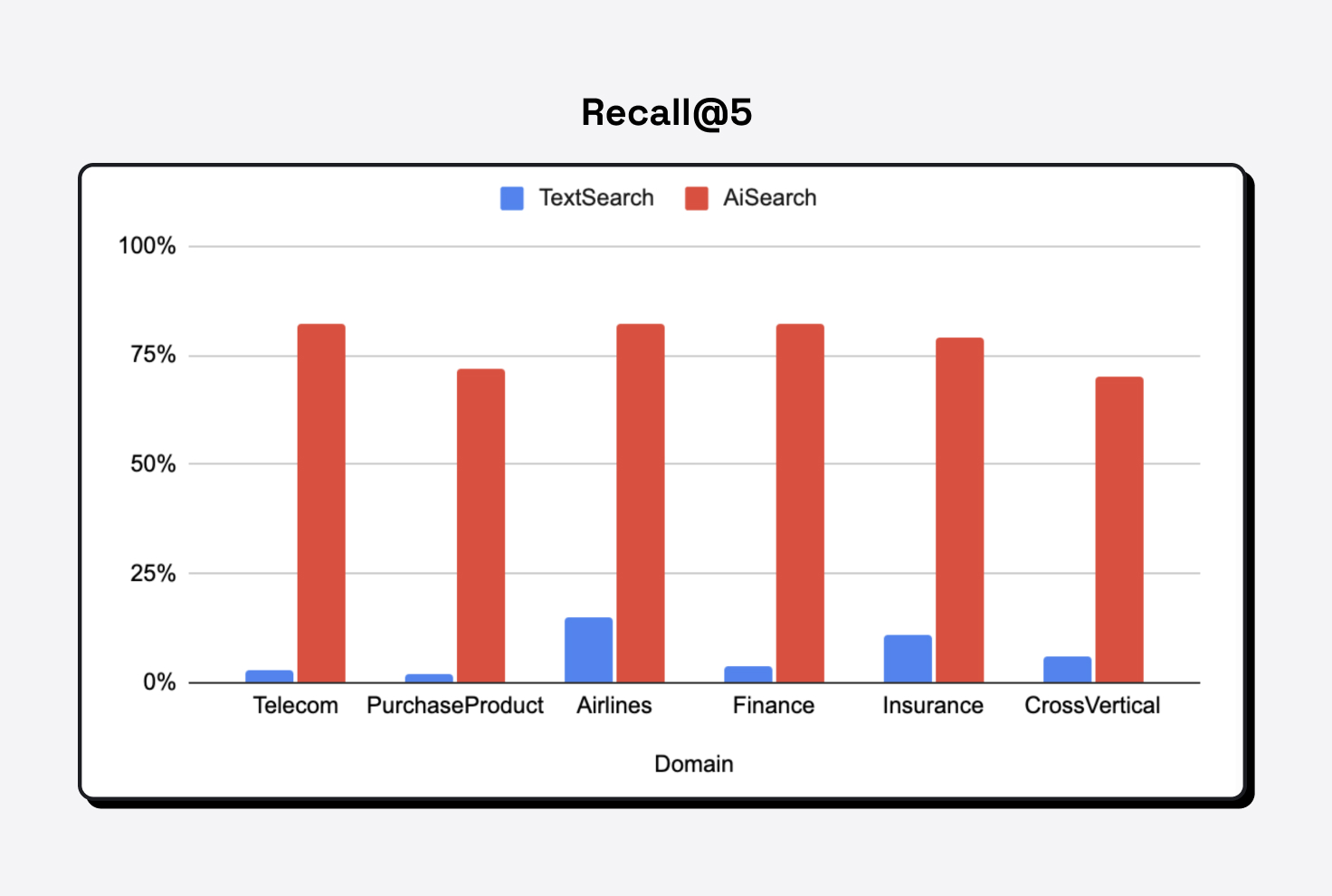

As predicted by theory and expected from our research, when compared to Text Search, AI Search nearly doubles the precision and multiplies the recall in the paraphrase task.

This means that AI Search returns answers that are almost twice as likely to be relevant or helpful. At the same time, AI Search is many times more likely to find the answer in the knowledge base (if one or more answers exist).
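As a reminder of what these two metrics measure, here is a minimal sketch that computes precision and recall for a single query. The article IDs are hypothetical, and the function is an illustration rather than the evaluation code used in the study.

```python
def precision_recall(returned, relevant):
    """Precision: share of returned articles that are relevant.
    Recall: share of relevant articles that were returned."""
    returned, relevant = set(returned), set(relevant)
    true_positives = len(returned & relevant)
    precision = true_positives / len(returned) if returned else 0.0
    recall = true_positives / len(relevant) if relevant else 0.0
    return precision, recall

# Hypothetical article IDs for one query
returned_articles = ["a1", "a2", "a3", "a4"]
relevant_articles = ["a1", "a2", "a5"]

precision, recall = precision_recall(returned_articles, relevant_articles)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Higher precision means fewer irrelevant answers shown to the consumer; higher recall means fewer existing answers missed.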

We kept going. We also used our annotated golden dataset to look at true and false positives and at precision and recall. We found that, compared to Text Search, AI Search substantially increases the true positive count and substantially reduces the false negative rate.

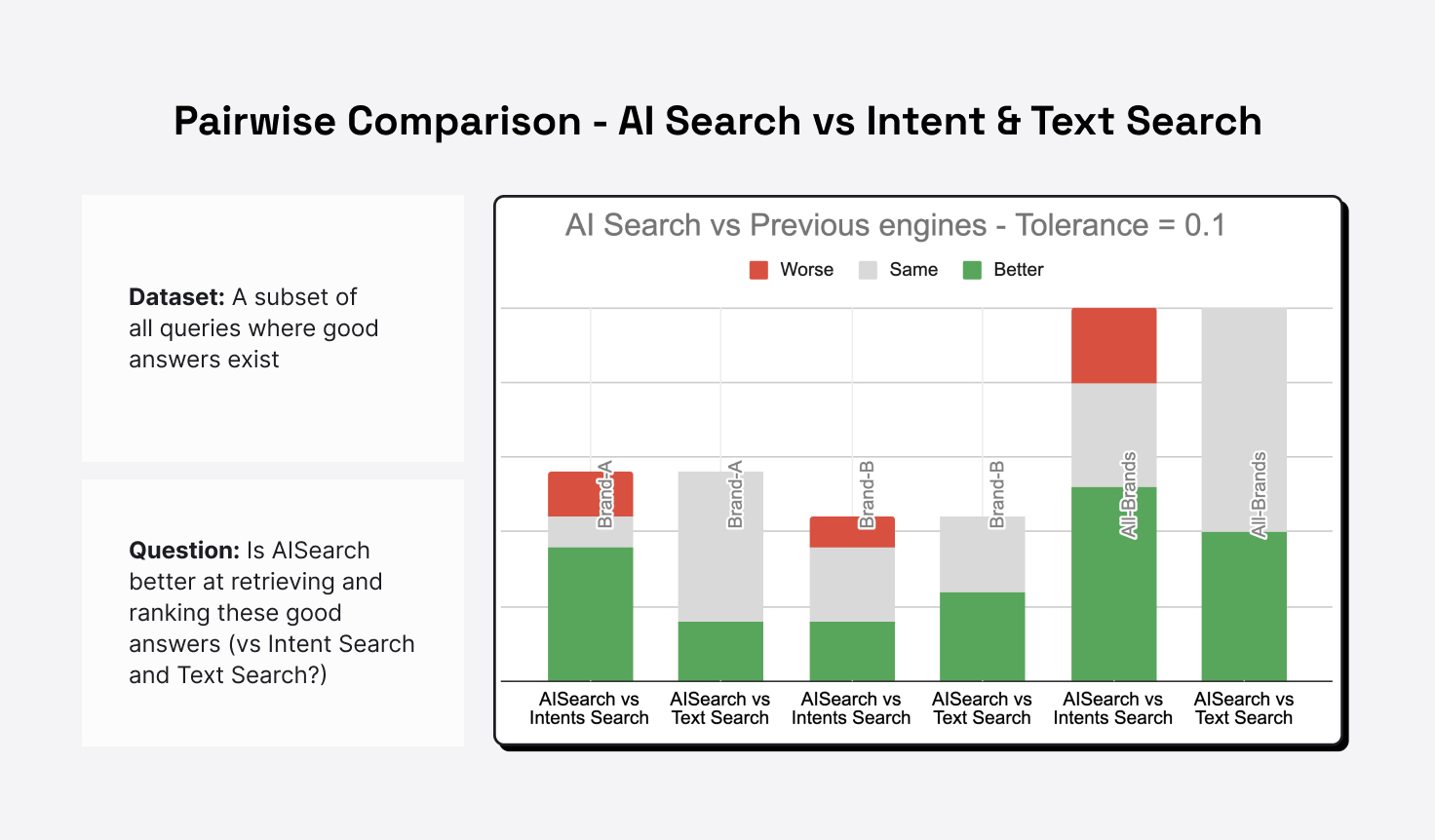

We didn’t stop there. We ran a pairwise comparison (a form of A/B testing) to show exactly how each search engine answers consumer queries using brands’ knowledge bases. In this study, we compared each engine’s scores against the “ground truth” (the average of annotators’ ratings). For questions with relevant answers in the knowledge base, if one search engine’s score was clearly higher than the other engine’s score, we regarded that engine as better.

For example, for a great query-answer pair, if AI Search scored an answer as 0.73 and Intents Search scored the same answer as 0.89, the 0.16 difference is larger than the margin (tolerance) of 0.1. So, we regarded Intents Search as better. If AI Search’s score was 0.83 or 0.93, the difference would be smaller than 0.1, so we considered the two engines the same.
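The margin rule in this example can be sketched as follows. It covers only the case described here, a relevant answer where a higher score is better. The function name and the handling of a difference exactly equal to the margin are assumptions.

```python
MARGIN = 0.1  # tolerance from the example in the text

def compare_scores(first_score, second_score, margin=MARGIN):
    """Return 'first', 'second', or 'same' for two engines' scores
    on the same relevant query-answer pair."""
    diff = first_score - second_score
    # Differences inside the margin are treated as a tie
    if abs(diff) < margin:
        return "same"
    return "first" if diff > 0 else "second"

INTENTS_SCORE = 0.89
for ai_score in (0.73, 0.83, 0.93):
    outcome = compare_scores(ai_score, INTENTS_SCORE)
    print(f"AI Search {ai_score} vs Intents Search {INTENTS_SCORE}: {outcome}")
```

Here `first` refers to AI Search and `second` to Intents Search, so the 0.73 case resolves to `second` and the other two cases to `same`.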

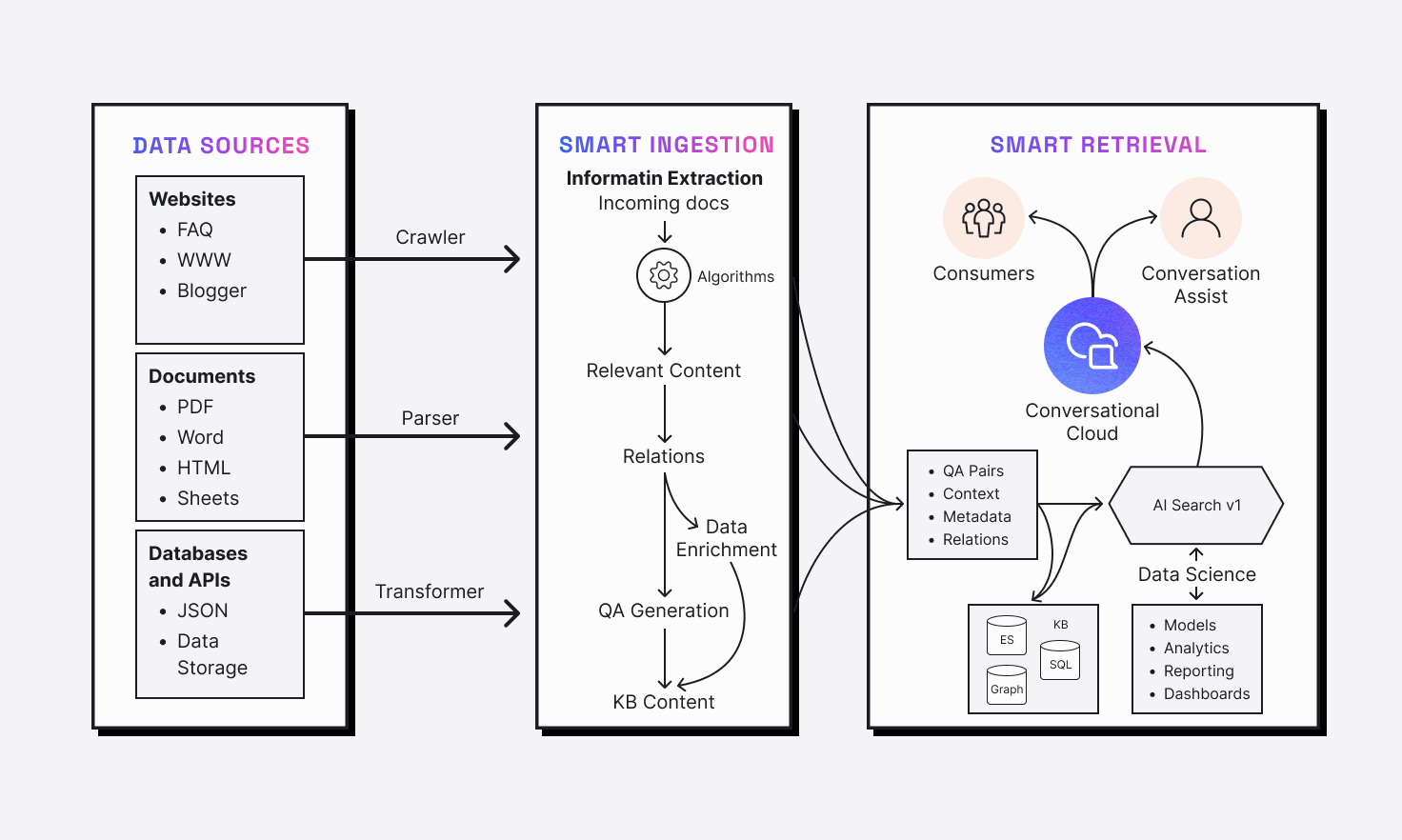

Our pairwise comparison study showed AI Search clearly outperforms Text Search. It even has a slight overall advantage over Intents Search, which requires intensive training.

What to remember when leveraging this AI-powered search

1. It’s easy to use

AI Search presents a great opportunity for brands to quickly make the most of their accumulated knowledge. Integrate your internal knowledge base, the support info on your website, an FAQ page, a PDF document, and more. Just add your content, hook it up to a bot or expose it to your agents, and go. Best of all, AI Search works well right out of the box: no setup of the search feature is required.

2. It benefits from high-quality knowledge bases

As you get rolling, keep in mind that AI Search performs better with large, high-quality knowledge bases that have broad, diverse coverage.

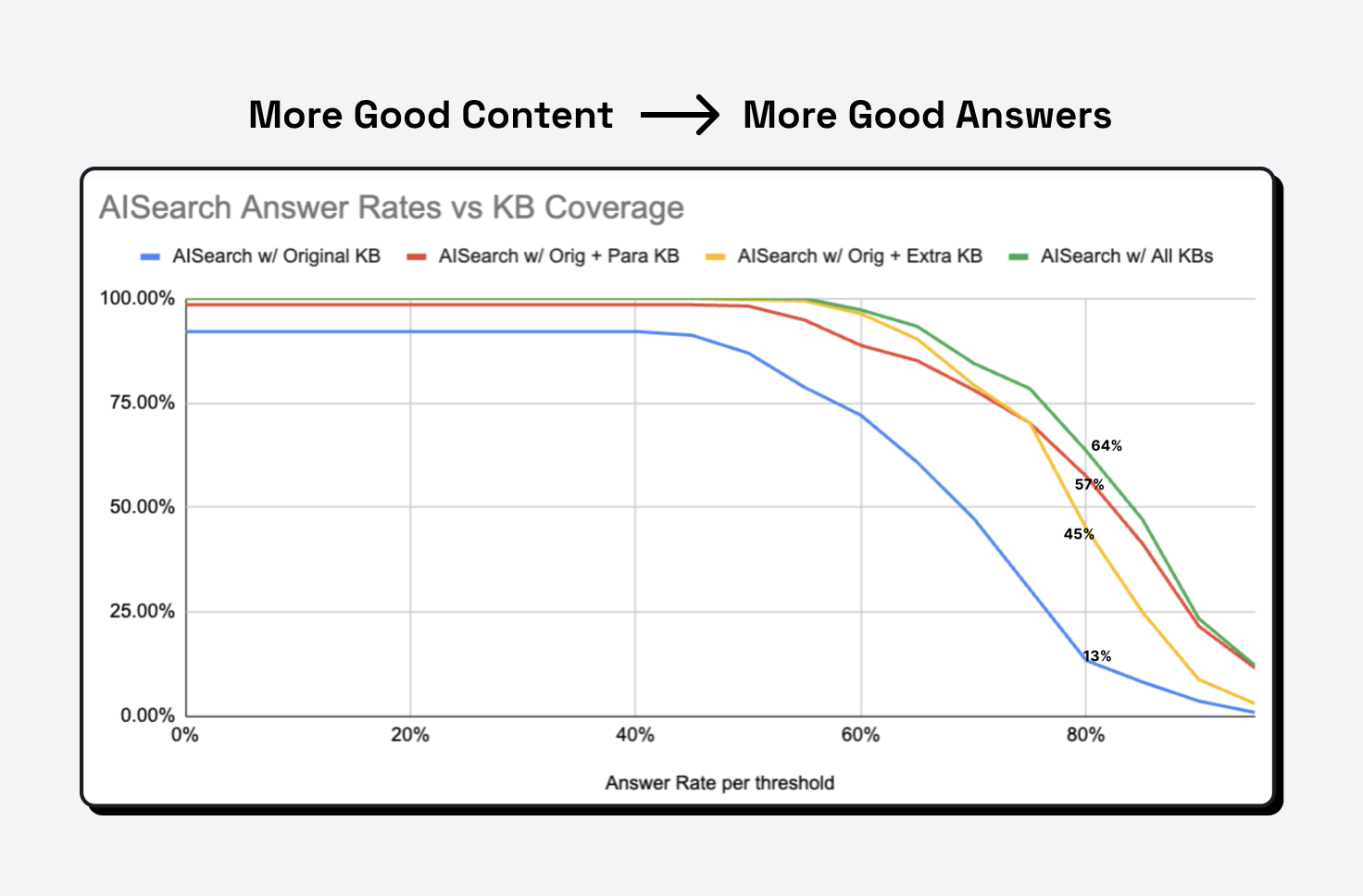

The chart above shows AI Search’s answer rate for various knowledge bases. The data reveals that the more articles a knowledge base has, the higher its answer rate. This means more queries will be answered, and the answers will have higher relevance scores.

3. It’s a good fallback for Intents Search

AI Search can make up for issues that can occur when using LivePerson intents. Training intents can be difficult and labor intensive, and because it is performed by humans, an intent model can be hard to scale. Moreover, the model might have flaws, such as gaps or overlaps in its coverage.

If you’re using Intents Search, then using AI Search as a fallback search is a good strategy to address issues like these. The two searches are better together.
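One way to picture the fallback strategy is the sketch below. It is not the KnowledgeAI API: the two search functions are stand-ins, and the `min_score` threshold and the `(title, score)` result shape are assumptions made for illustration.

```python
def search_with_fallback(query, primary_search, fallback_search, min_score=0.5):
    """Use primary_search first; if no hit reaches min_score, use fallback_search.
    Each search function returns a list of (article_title, score) tuples."""
    confident_hits = [hit for hit in primary_search(query) if hit[1] >= min_score]
    if confident_hits:
        return "primary", confident_hits
    return "fallback", fallback_search(query)

# Stand-in for an intent-based search that only knows trained phrasings (hypothetical)
def toy_intents_search(query):
    trained = {"what is your return policy": [("Return policy", 0.92)]}
    return trained.get(query.lower().rstrip("?"), [])

# Stand-in for a semantic search that always finds a nearest article (hypothetical)
def toy_ai_search(query):
    return [("Return policy", 0.71)]

print(search_with_fallback("What is your return policy?", toy_intents_search, toy_ai_search))
print(search_with_fallback("Can I send this back?", toy_intents_search, toy_ai_search))
```

The first query matches a trained phrasing and is answered by the primary search; the second falls through to the fallback, which is where a gap in intent coverage would otherwise leave the consumer without an answer.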